Plug, Play, and Pray: The Hidden Cost of Trusting AI Models


You Didn’t Lose Control – You Gave It Away

In the last post, we pulled back the curtain on a new class of cyber risk – one that doesn’t knock, crash, or encrypt. It embeds. It whispers. It performs.

AI isn’t just a novel tool anymore. For better or worse, prematurely or not, it’s becoming infrastructure. It’s the digital spine behind your CRM, your operations, your customer experience. And like any infrastructure, it’s vulnerable – not just to failure, but to manipulation.

Here’s the twist: this threat is new, but the playbook isn’t.

We’ve seen this before.

Poison-pill packages on NPM.

Infected scripts buried in GitHub repos.

Malicious dependencies passed along like inherited debt.

What’s changed is where the manipulation lives – inside the intelligence layer of your business.

Now, the same quiet sabotage that once lurked in libraries and scripts has moved upstream into the models you trust to make decisions. The stakes are higher. The outcomes are harder to detect. And the damage compounds silently.

This isn’t a warning. It’s a breakdown. The playbook for AI sabotage isn’t new, but the threat is, and it’s already emerging in the wild.

I’m going to walk you through the four tactics every leader needs to understand now:

Model Manipulation – AI models that arrive preloaded with someone else’s code or agenda.

Data Poisoning – Corrupt inputs that reshape how your AI interprets the world.

System Degradation – Subtle sabotage that increases cost and erodes reliability.

Communication Distortion – When your AI becomes an untrustworthy voice for your brand.

And to ground this, we’ll keep coming back to a real-world case: the Hugging Face incident – where over 100 compromised AI models were discovered live on a trusted platform, ready for download.

They didn’t crash systems. They didn’t encrypt files.

They worked – just not for you.

Model Manipulation: When “Plug-and-Play” Becomes “Plug-and-Pray”

Model Manipulation is the act of tampering with AI models – typically before they’re integrated into your business systems – to change how they behave, influence their output, or embed hidden code.

It’s not science fiction. It’s supply chain sabotage at the intelligence layer.

Here’s what that can look like:

Malicious code embedded inside model weights or attached files

Backdoors that allow outside actors to control or influence the model

Subtle behavioral shifts that change how the model interprets prompts or data

Trigger-based malware, activated only under specific inputs or conditions

This is especially dangerous for SMBs and fast-moving teams that pull models from public platforms or third-party vendors with limited validation or internal review.

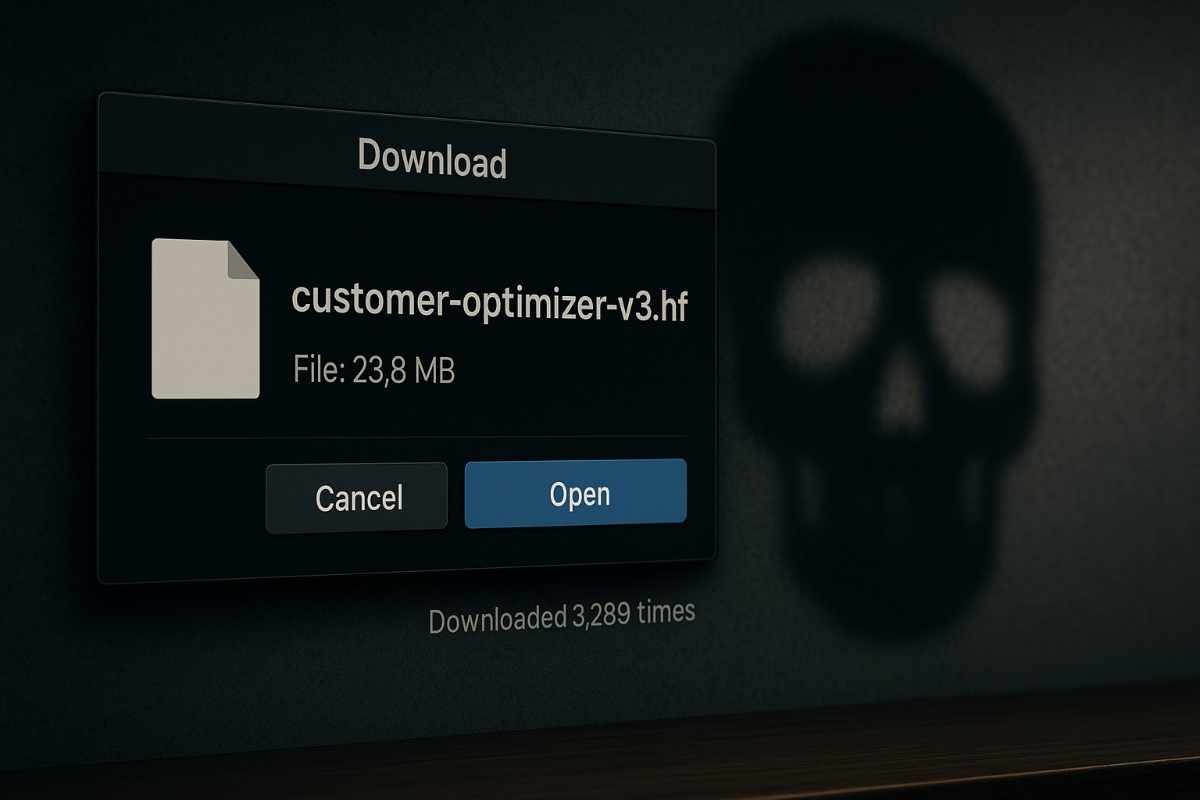

And in May 2024, we got the wake-up call.

Security researchers uncovered over 100 AI models hosted on Hugging Face – one of the largest and most trusted AI platforms – that were laced with malicious code embedded directly into the model weights. The malware wasn’t in the interface or metadata. It was in the model itself.

Even more alarming? These models had been live for months and were publicly available to anyone building AI applications.

No alarms. No firewall triggers. Just one click…and a compromised foundation.

This wasn’t a breach – it was an infiltration by invitation, carried out in plain sight.

For most businesses, the takeaway is blunt:

If you didn’t build it, didn’t inspect it, and didn’t test it – you have no idea what that model is actually doing.
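One small piece of "inspect it" can be made concrete. The sketch below is a minimal illustration, not a complete control: it assumes your team records a SHA-256 digest for each model file at the moment someone actually reviews it, then refuses to load any file that no longer matches. The function names and the idea of an "approved" digest are assumptions made for this example.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large model artifacts never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_file(path: Path, approved_sha256: str) -> bool:
    """Return True only if the file matches the digest recorded at review time."""
    return sha256_of_file(path) == approved_sha256.lower()
```

A matching digest only tells you the file is the same one that was reviewed. It says nothing about whether that review was thorough, so it complements inspection and testing rather than replacing them.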

Data Poisoning: When the Training Set Is the Trojan Horse

Not every threat walks in the front door. Some of them are grown in your garden.

Data Poisoning is the practice of feeding corrupt, biased, or malicious data into the training pipeline of an AI model – deliberately or incidentally – to manipulate the model’s behavior once deployed.

It doesn’t require access to your codebase. It just needs to touch the data your AI learns from.

Here’s what it can look like:

Injecting subtle bias or mislabels into publicly sourced training data

Altering key inputs during fine-tuning to trigger incorrect or harmful responses

Feeding bad reinforcement signals into systems that retrain on live customer feedback

Embedding hidden prompts or triggers to elicit alternate behavior under specific conditions

The end result? Your AI still “works,” but it now sees the world through a distorted lens. You’ve effectively outsourced your worldview to bad data – and didn’t know it.

This can lead to:

Erroneous recommendations (think AI suggesting the wrong product or diagnosis)

Security misclassifications (ignoring threats it should flag, or flagging safe behavior)

Skewed decision-making in sales, hiring, lending, or operational processes

The kicker? Most SMBs use pretrained models or open datasets to accelerate time-to-value. But very few vet the underlying data lineage.

So if the model’s been poisoned upstream – and you fine-tune it on your clean data – it’s like putting lipstick on a Trojan horse.
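Vetting data lineage is a big job, but some symptoms of tampering are cheap to look for. As a rough sketch, assuming your fine-tuning data is a list of (text, label) pairs, the hypothetical helper below flags examples whose text is effectively identical but whose labels disagree, which is one crude fingerprint of mislabeled or injected records.

```python
from collections import defaultdict


def find_conflicting_labels(records):
    """Return normalized texts that appear with more than one label."""
    labels_by_text = defaultdict(set)
    for text, label in records:
        # Normalize case and whitespace so trivial variations group together.
        key = " ".join(text.lower().split())
        labels_by_text[key].add(label)
    return {
        text: sorted(labels)
        for text, labels in labels_by_text.items()
        if len(labels) > 1
    }


records = [
    ("Reset my password", "support"),
    ("reset my  password", "spam"),
    ("Cancel order", "support"),
]
print(find_conflicting_labels(records))
# {'reset my password': ['spam', 'support']}
```

This will not catch careful poisoning, and it cannot see what happened upstream in a pretrained model. It is simply the kind of inexpensive check that turns "we trust the data" into "we looked at the data."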

System Degradation: The Slow Burn You Pay For

Not every attack is loud. Some just make everything…worse.

System Degradation is when malicious actors intentionally undermine the performance or efficiency of your AI infrastructure – not to destroy it, but to quietly drain it.

This isn’t the stuff of headlines. It’s the stuff of bottom-line erosion.

Here’s how it shows up:

Forcing models to run unoptimized paths that spike GPU usage

Triggering excessive memory calls or API loops that drive up infrastructure costs

Causing latency issues in customer-facing applications, degrading trust and satisfaction

Introducing inefficiencies that go unnoticed until you’re bleeding spend and speed

Unlike ransomware or data theft, the goal here isn’t to shut you down.

It’s to make your system run just poorly enough that no one looks too hard – but your burn rate steadily climbs.

Think of it like digital friction.

Your systems slow down. Your support tickets increase. Your engineers waste time chasing ghost bugs that aren’t bugs – they’re sabotage.

And when you’re relying on AI to deliver real-time experiences, even a few seconds of delay can mean churn, failed conversions, or reputational damage.

This is death by a thousand milliseconds.

And unless you have deep observability into how your models are executing and performing across infrastructure, you might not realize you’re under attack at all.
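What might the thinnest slice of that observability look like? The sketch below assumes you can record a latency figure for each model call. It freezes a baseline from the first batch of calls, then compares a rolling window of recent calls against it. The class name, window sizes, and the 25% tolerance are all illustrative assumptions, not recommendations.

```python
from collections import deque
from statistics import mean


class LatencyDriftWatch:
    """Flag when recent average latency drifts above a frozen baseline."""

    def __init__(self, baseline_size=50, recent_size=50, max_ratio=1.25):
        self.baseline_size = baseline_size
        self.baseline = []  # filled once, then never updated
        self.recent = deque(maxlen=recent_size)
        self.max_ratio = max_ratio

    def observe(self, latency_ms: float) -> bool:
        """Record one sample; return True if the recent window has drifted."""
        if len(self.baseline) < self.baseline_size:
            self.baseline.append(latency_ms)
            return False
        self.recent.append(latency_ms)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough recent data to judge yet
        return mean(self.recent) > self.max_ratio * mean(self.baseline)
```

The baseline is frozen on purpose. A baseline that keeps adapting would quietly absorb a slow burn, which is exactly the pattern described above. The trade-off is that a legitimate change, such as a new model version, means resetting the baseline deliberately.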

Communication Distortion: When Your AI Becomes a Liability

Your brand used to speak through people. More and more, however, it speaks through prompts, bots, and automated workflows. And that voice? It’s only as trustworthy as the systems behind it.

Communication Distortion is when bad actors manipulate or degrade your AI-driven communications – customer support, marketing, outbound messaging – so subtly that your own business becomes the source of confusion, frustration, or even harm.

It’s not that the system is broken. It’s that it’s speaking someone else’s agenda.

Here’s what that can look like:

Your chatbot gives inconsistent or hallucinated answers during support conversations

Your automated emails start using problematic language or incorrect offers

Your AI-generated content contains misinformation or competitor bias

Your sales AI ghosts leads or responds in a way that kills trust before the first call

At scale, this becomes brand sabotage from the inside out.

You’ve trained the system to speak for you, and now you don’t know who it’s really speaking for.

Even worse? These distortions often come from upstream influences – poisoned training data, compromised models, or subtle prompt injections that alter tone or meaning.

It’s one thing to have your emails ignored. It’s another thing to have your AI whisper the wrong thing to a thousand customers…before you even realize it happened.

In the new trust economy, your AI is your reputation. If you can’t control what it says, you’ve already lost the conversation.
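Control over what your AI says can start with something as plain as a checkpoint between the model and the send button. The sketch below is a deliberately simple illustration: it holds back any outbound message that mentions a percentage marketing has not approved or contains a phrase on a block list. The approved discounts, the blocked phrases, and the function name are all hypothetical placeholders.

```python
import re

# Hypothetical values for illustration only.
APPROVED_DISCOUNTS = {10, 15}  # percentages that have been signed off
BLOCKED_PHRASES = ("guaranteed returns", "competitorco")


def review_outbound_message(text: str) -> list[str]:
    """Return reasons to hold the message for human review (empty means send)."""
    reasons = []
    lowered = text.lower()
    for phrase in BLOCKED_PHRASES:
        if phrase in lowered:
            reasons.append(f"blocked phrase: {phrase}")
    for match in re.finditer(r"(\d{1,3})\s*%", text):
        percent = int(match.group(1))
        if percent not in APPROVED_DISCOUNTS:
            reasons.append(f"unapproved percentage: {percent}%")
    return reasons


print(review_outbound_message("Enjoy 10% off your next order."))
# []
print(review_outbound_message("Get 70% off today, guaranteed returns!"))
# ['blocked phrase: guaranteed returns', 'unapproved percentage: 70%']
```

Pattern matching like this will miss subtle shifts in tone or meaning. Its value is narrower: the most damaging and most checkable mistakes, such as wrong offers or forbidden claims, get a human look before a thousand customers see them.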

The Hugging Face Incident: Trust, Compromised

In May 2024, cybersecurity researchers at JFrog Security disclosed a troubling discovery: over 100 AI models hosted on Hugging Face’s public model hub contained embedded malware.

The report, published on JFrog’s blog, revealed that the infected models were uploaded by various pseudonymous users. These models had been publicly available for months and, in many cases, had been downloaded thousands of times.

What Happened

The malicious payloads were embedded directly within the model files themselves rather than in any surrounding application code, which let them slip past scanning tools and reviews that only look at source code. JFrog’s analysis pointed to Python’s pickle serialization format, which can run arbitrary code during deserialization. Simply loading a compromised model was enough to execute the attacker’s code.

JFrog researchers confirmed the malware could:

Collect environment variables, including system credentials and API keys

Attempt to exfiltrate data from the host environment

Connect to remote command-and-control servers to fetch additional payloads

This wasn’t theoretical. Some of these models were already being incorporated into pipelines and products by developers unaware of the underlying compromise.
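To make the mechanism less abstract: Python’s pickle format, which many model files have historically relied on, is a small instruction stream, and some of those instructions import and call arbitrary functions at load time. The sketch below uses the standard library’s `pickletools` to list those instructions without ever loading the file. The opcode set and the function name are choices made for this example, and the `Demo` class is a harmless stand-in for a payload.

```python
import pickle
import pickletools

# Opcodes that import objects or call them while a pickle is being loaded.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def list_suspicious_opcodes(data: bytes) -> list[str]:
    """Walk the pickle instruction stream without executing any of it."""
    found = []
    for opcode, _arg, _pos in pickletools.genops(data):
        if opcode.name in SUSPICIOUS_OPCODES:
            found.append(opcode.name)
    return found


class Demo:
    """Harmless stand-in: unpickling this object would call print()."""

    def __reduce__(self):
        return (print, ("code ran during load",))


plain = pickle.dumps({"layer": [0.1, 0.2]})
print(list_suspicious_opcodes(plain))  # []

crafted = pickle.dumps(Demo())  # dumping does not run the payload
print("REDUCE" in list_suspicious_opcodes(crafted))  # True
```

A hit here is a reason to look closer, not proof of malice, because legitimate pickle-based checkpoints use these same opcodes to rebuild their objects. Practical scanners go further and check which functions are being imported, but the principle is the same: inspect the file before anything is allowed to load it.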

How It Was Addressed

In response, Hugging Face took swift action:

All identified malicious models were removed from the platform

The platform implemented new scanning protocols for malware detection

Its Trust & Safety team was expanded to oversee future uploads

New features were rolled out to allow community reporting of suspicious models

Industry Response

While Hugging Face moved quickly to contain the issue, the ripple effect across the AI development community was real:

Some organizations paused usage of open-source models pending internal reviews

Others conducted audits of previously downloaded models to check for exposure

The incident accelerated interest in model provenance tracking and zero-trust AI security postures

No major enterprise breaches were publicly tied to the infected models. But the underlying message landed hard: if the model is compromised, everything that runs on it is compromised too.

The Hugging Face incident wasn’t a fluke. It was a case study in the new attack surface of AI infrastructure – and a preview of what happens when trust is assumed, not verified.

This Isn’t a Glitch – It’s a Blueprint

What we’ve explored in this post isn’t science fiction. It’s not hypothetical. It’s here.

We’ve seen AI models manipulated to perform on someone else’s behalf. We’ve seen poisoned data distort decision-making. We’ve seen silent performance degradation that bleeds budgets. And we’ve seen customer-facing systems weaponized to turn trust into confusion.

This isn’t just an evolution in cybersecurity. It’s a strategic reshuffling of the battlefield – where the point of entry isn’t your firewall, but your foundational intelligence layer.

And the Hugging Face incident proves it:

Even the platforms you trust most are susceptible.

Even your helpers can be infected.

And even when things “work,” they might be working against you.

But now that we’ve named the threat, what’s next?

In the final post of this series, we’ll go beyond awareness and into action – laying out the strategic principles every organization needs to implement now to protect itself from this new class of cyber risk.

Because it’s no longer about locking the doors.

It’s about building a house that can’t be quietly rearranged from the inside.

Sources & References

JFrog Security. 100+ Malicious AI Models Found on Hugging Face.

https://jfrog.com/blog/100-malicious-ml-models-found-on-huggingface/

Wired. Hackers Are Now Hiding Malware in AI Models.

https://www.wired.com/story/hugging-face-malware-models-jfrog/

Hugging Face. Trust & Safety Updates.

https://huggingface.co/blog/trust-safety-update

Ars Technica. Malware-laced AI Models Found on Hugging Face Platform.

https://arstechnica.com/information-technology/2024/05/malicious-payloads-found-in-100-ai-models-on-hugging-face/