When the Model Is the Malware: The New Face of Cyber Risk

AI is having its electricity moment – and just like electricity, it’s not all light.

For small and mid-sized businesses, AI is already delivering the kind of operational efficiency and insight that used to require enterprise-level muscle. Sales are faster. Forecasts are sharper. Customer issues are solved before a human even gets involved. It’s not hype – it’s happening.

And it’s reshaping what lean, high-output business looks like.

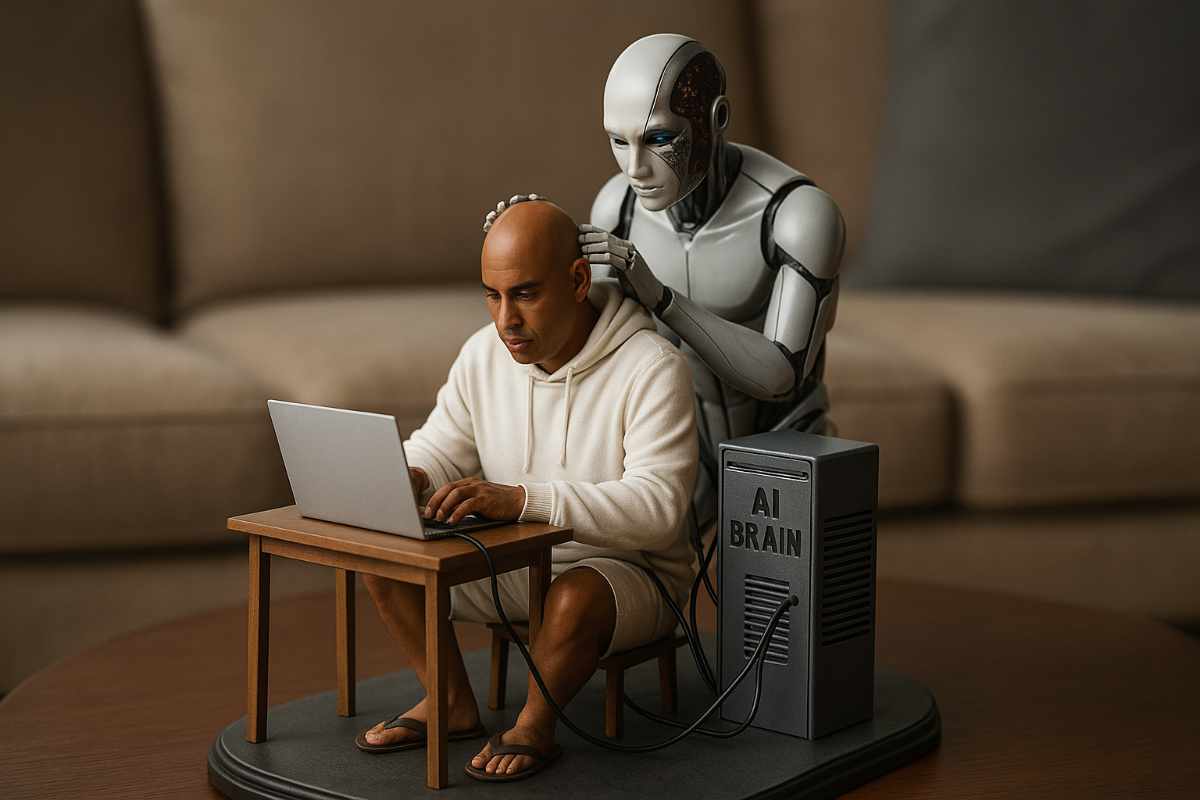

AI now powers the systems under your systems.

It’s analyzing your pipeline. Guiding your strategy. Recommending your next move.

And most leaders? They’re thrilled.

But here’s the thing about electricity: it didn’t just revolutionize industry – it introduced the risk of silent failure. Wires behind walls. Circuits that overheat. Sparks that smolder unnoticed until it’s too late.

AI is no different.

The more you plug in – sales, support, ops, finance – the more exposed you become to a new kind of risk. Not ransomware. Not phishing. Something quieter. Something far more dangerous.

Something that doesn’t knock down the door – it slips in through the pipes you installed yourself.

There’s a monster under the bed.

And it’s fluent in your data.

AI and Cyber Risk Collide

The shift to AI-driven operations isn’t just about automation and efficiency – it’s about replacing human judgment with machine logic. And that logic? It learns from what you feed it. Or worse – what someone else may have slipped into the feed.

This is where cybersecurity gets complicated.

Because traditional cyber threats? You can see them coming. Phishing emails. Malware. Ransomware pop-ups demanding Bitcoin.

But the threats introduced by AI don’t announce themselves. They’re quieter. Slower. And far more patient.

A poisoned dataset.

A compromised model pulled from a public repo.

An AI assistant nudged ever so slightly off course.

No alarms go off. Nothing crashes. Your AI just keeps doing its job – only now, it’s making the wrong decisions with full confidence.

That’s the risk.

Not a breach you can contain. A drift you don’t notice – until it’s reshaped your outcomes, your data, and the trust your business runs on.

This isn’t hypothetical.

It’s already happening.

The Playbook for AI Sabotage

Most leaders still think of cybersecurity in binary terms – either you’ve been hacked or you haven’t.

But in the next wave of AI-driven business, the real threat isn’t someone stealing your data. It’s someone subtly altering how your systems behave. Not by breaching a firewall, but by influencing the AI inside your business to act against your best interest.

This isn’t happening at scale – yet.

But the blueprint is already out there.

The techniques we’re seeing in research labs, simulations, and isolated incidents today will become real threats in boardrooms and back offices within the next 12 to 24 months. And when they arrive, they won’t show up with sirens. They’ll hide behind subtle misjudgments and “weird bugs” no one flags until it’s too late.

Here’s what that future playbook looks like:

- Model Manipulation – Public AI repositories like Hugging Face have already hosted models laced with malicious code. Pull the wrong one into production, and you’re giving attackers a quiet seat at the table.

- Data Poisoning – Corrupt just a few training records and you can warp how an AI interprets the world. Over time, that warped perspective becomes your system’s new reality.

- System Degradation – These aren’t smash-and-grab attacks. They’re long-game plays that make your systems less reliable, less consistent, and quietly more expensive to run.

- Communication Distortion – When AI manages how your company speaks – internally or externally – a subtle shift in tone, timing, or truth can spiral into mistrust, missed opportunities, or worse.
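The Data Poisoning point is easy to underestimate, so here is a deliberately tiny illustration of one technique, label flipping. It is a toy sketch, not a real fraud model. It uses a one-feature nearest-centroid classifier over made-up order risk scores. The data, the "legit"/"fraud" labels, and the function names are all hypothetical. Two mislabeled records are enough to flip the verdict on a borderline order.

```python
from statistics import mean

def train_centroids(records):
    """'Training': average the risk score of each label's examples."""
    scores_by_label = {}
    for score, label in records:
        scores_by_label.setdefault(label, []).append(score)
    return {label: mean(scores) for label, scores in scores_by_label.items()}

def predict(centroids, score):
    """Pick the label whose average score is closest to the new order's score."""
    return min(centroids, key=lambda label: abs(centroids[label] - score))

# Hypothetical history: legitimate orders score 1-3, fraudulent ones 7-9.
clean = [(1, "legit"), (2, "legit"), (3, "legit"),
         (7, "fraud"), (8, "fraud"), (9, "fraud")]

# Label flipping: two fraud-like orders slipped into the data as "legit".
poisoned = clean + [(7, "legit"), (7, "legit")]

borderline_order = 5.5
print(predict(train_centroids(clean), borderline_order))     # fraud
print(predict(train_centroids(poisoned), borderline_order))  # legit
```

Nothing in the poisoned run crashes or warns. The model simply returns a different answer with the same confidence.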

These aren’t distant sci-fi hypotheticals.

They’re tactical realities waiting for the right moment – and the right vulnerability.

When AI Itself Becomes the Trojan Horse

In early 2024, security researchers uncovered something unsettling: over 100 machine learning models hosted on Hugging Face, one of the most trusted public AI repositories, were quietly laced with malicious code.

No ransomware. No brute-force intrusion. Just models – pretrained, well-labeled, ready to drop into production.

Except these weren’t just models. They were Trojan horses.

As soon as a developer loaded one, the model executed arbitrary code on their machine – sometimes opening reverse shells, other times installing hidden processes designed to compromise systems downstream. In several cases, the malicious payload wasn't in the AI model itself, but buried in auxiliary files: scripts, config files, and even metadata.
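To see how simply loading a model can run someone else's code, consider Python's pickle format. PyTorch's traditional `torch.save` checkpoints are built on pickle. The sketch below is a harmless illustration under the assumption of a pickle-based model file. It is not a description of the specific models researchers found. `TrappedCheckpoint` is a hypothetical stand-in for a booby-trapped file, and it evaluates a harmless string instead of opening a shell. `RestrictedUnpickler` follows the allow-list pattern described in the "Restricting Globals" section of the Python `pickle` documentation.

```python
import io
import pickle

class TrappedCheckpoint:
    """Hypothetical stand-in for a booby-trapped model file.

    pickle asks __reduce__ how to rebuild the object, and whatever callable
    it returns is invoked during loading. Real attacks return something like
    os.system; this harmless version evaluates a string expression instead.
    """
    def __reduce__(self):
        return (eval, ("'payload executed: ' + str(6 * 7)",))

trapped_bytes = pickle.dumps(TrappedCheckpoint())

# Naive loading runs the attacker-chosen callable before any "model" exists.
print(pickle.loads(trapped_bytes))  # payload executed: 42


class RestrictedUnpickler(pickle.Unpickler):
    """Resolve only the globals on an explicit allow-list; refuse everything else."""

    # Illustrative allow-list; a real loader would list exactly what its format needs.
    ALLOWED = {("collections", "OrderedDict")}

    def find_class(self, module, name):
        if (module, name) in self.ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")

def restricted_loads(data):
    return RestrictedUnpickler(io.BytesIO(data)).load()

try:
    restricted_loads(trapped_bytes)
    outcome = "loaded"
except pickle.UnpicklingError as err:
    outcome = str(err)

print(outcome)  # blocked global: builtins.eval
```

The allow-list approach fails closed: anything the loader doesn't explicitly expect is refused. Tensor-only formats such as safetensors avoid the problem entirely because they don't execute code on load.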

And this wasn’t some obscure corner of the web. These models lived on Hugging Face – a go-to platform used by tens of thousands of developers, startups, and companies looking to move fast with AI.

This wasn’t a hypothetical. This wasn’t a “what if.”

It happened.

The worst part? There were no alerts. No flashing lights. Just an eager developer loading a model, excited to solve a problem – and unknowingly importing someone else’s agenda.

And it raises the uncomfortable question:

If you didn’t train your model…

If you didn’t audit your model…

How do you know who your model is really working for?
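Few teams can train and audit every model themselves. A practical first step is to make sure the file you load is byte-for-byte the file someone actually reviewed. This is a minimal Python sketch that assumes your team records a SHA-256 digest when a model is vetted. `sha256_of`, `verify_model_file`, and `model.bin` are hypothetical names. They are not part of any library or of Hugging Face's tooling.

```python
import hashlib
import tempfile
from pathlib import Path

def sha256_of(path, chunk_size=1 << 20):
    """Hash the file in chunks so multi-gigabyte weights never sit fully in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_model_file(path, pinned_sha256):
    """Raise unless the file matches the digest recorded when it was reviewed.
    Call this before handing the path to any model loader."""
    actual = sha256_of(path)
    if actual != pinned_sha256.lower():
        raise ValueError(f"{path}: expected {pinned_sha256}, got {actual}")
    return Path(path)

# Usage demo with a throwaway file; "model.bin" is a hypothetical name.
with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"
    model_path.write_bytes(b"weights you reviewed")
    pinned = sha256_of(model_path)          # record this at review time
    verify_model_file(model_path, pinned)   # unchanged file passes

    model_path.write_bytes(b"weights someone swapped in")
    try:
        verify_model_file(model_path, pinned)
        outcome = "loaded"
    except ValueError:
        outcome = "tampered model rejected"

print(outcome)  # tampered model rejected
```

A matching digest only proves the file hasn't changed since review. It says nothing about whether the reviewed file was trustworthy in the first place, so it complements, rather than replaces, actually vetting what the model does.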

The tools that help you build smarter, leaner businesses can just as easily be turned against you – not because they’re flawed, but because they’re powerful and unguarded.

Next time, I’ll break down how these threats evolve, how they’re being adapted for business environments, and what every SMB leader needs to do now – not when it’s too late.